| Photo: Mitchell Clark |

Disclosure: DPReview is attending Adobe Max, with Adobe covering travel and lodging expenses.

Over the past few days at the Adobe Max conference, I’ve been able to talk with product managers from the Photoshop and Lightroom teams, and demo some new and in-the-works features that didn’t get much time, if any, in the keynote presentation. We’ll go over the most interesting ones here, and wrap up with some of the things I’d like to see in future releases.

The Lightroom demos

Assisted Culling and Auto Stacks

| The tool lets you fine-tune most of the parameters. |

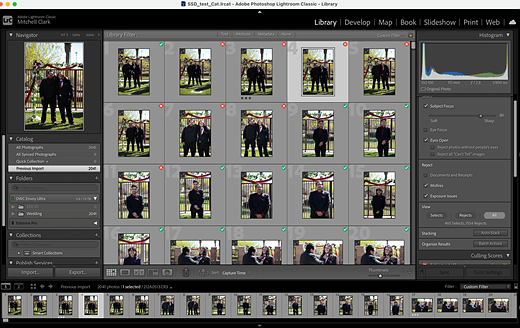

The headlining Lightroom feature this year is Assisted Culling, which is available as an “early access” feature in Lightroom and Lightroom Classic. We’ve covered it in more depth separately, but essentially, it’s a way to quickly sort through dozens or hundreds of photos so you only see the ones that are exposed properly and in focus. There are controls to set how picky you want it to be, and you can easily mark photos the system rejected as selects, or vice versa.

The culling tool can be accessed either in your library or in the import window, depending on where you’re trying to use it.

Adobe has also updated the Stacks feature in Lightroom. While it has long been able to group photos by capture time, it can now do so based on visual similarity too (and, again, you can choose how similar photos have to be to be stacked together). Combining the two features lets you process a lot of photos very quickly. When you have your selects, you can run a batch process on both them and the rejects, applying colored labels, star ratings and/or flags, or adding them to an album.

The system generally works with both files in the cloud and local files, though at the moment, you can only do visually similar stacking on local files in Lightroom Classic, not Lightroom.

Adobe says Assisted Culling is currently geared towards portrait photography, but that we can expect to see controls that make sense for other genres of photography in the future.

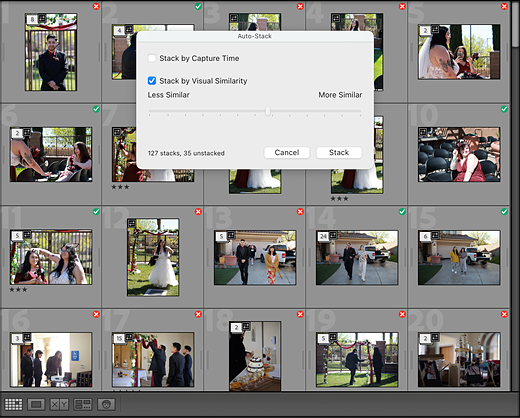

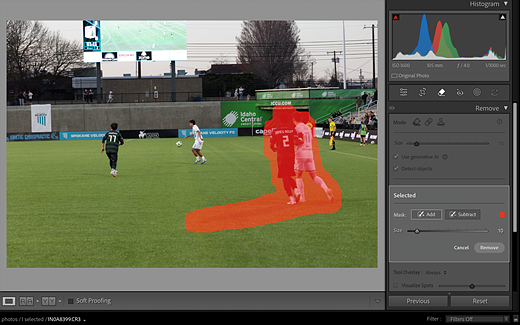

A better remove tool

| Note that this selection doesn’t include the players’ shadows. |

Adobe has also been hard at work on the remove tool, which photographers can use to automatically get rid of distracting elements in their photos. For one, it can now detect and delete dust spots from your sensor or lens, and can do so as a batch process. So, if you’ve just come back from a shoot and realized your sensor was dirty, you won’t have to fix each photo manually.

The remove model also now supports smarter object detection. Previously, if you selected a person and asked Lightroom to remove them, it’d do it, but might leave their shadow or reflection. Worse, it might look at the image, see that there’s a shadow, and try to insert something into the selected area, defeating the point entirely. Now, if you select the Detect Objects box, it will automatically detect and remove shadows and reflections too, even if you’ve only selected the object.

| With the “Detect objects” option selected, Lightroom fully selected the players’ bodies and their shadows, even though I didn’t completely scribble over them. |

Adobe also says it’s updated the model used in its reflection removal tool, allowing it to handle more complex situations.

While all the features we’ve talked about so far have been available on the desktop versions of Lightroom, there’s a new one coming exclusively to the Mobile and Web versions (for now): automatic blemish removal. It’s available as a Quick Action in the retouch menu, and, as with most Quick Actions, there’s a slider to control how strong you want the effect to be. Adobe says it’s one of the most requested features, but that it’s rolling it out in Early Access to get feedback before making it more widely available (it is messing with people’s faces, after all).

Smarter searching

Another feature that’s in beta is an improved search tool that’s much better at the kind of semantic searching you may be familiar with from cloud-based photo management tools from the likes of Apple and Google. While you’ve been able to search for things like “dog” or “person” for a while now, the improved search is much more granular. In the demo I saw, the presenter searched for “man standing at the end of a pier,” and it was able to find the photo he was looking for.

It’s currently only on Lightroom for the Web (which means it only works on photos you’ve uploaded to your account), and it has to do a scan of your library after you turn it on. While Adobe says it plans on bringing it to the desktop in the near-to-mid-future, you’ll currently have to go to your profile, then enable the Improved Search feature in the Technology Previews section to use it.

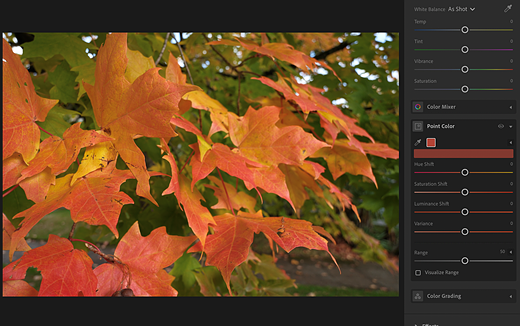

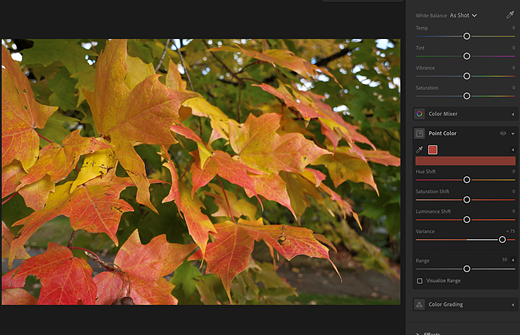

What the heck is color variance?

| The color variance slider, with no changes applied. Photo: Richard Butler |

If I’m being honest, when I originally wrote about Adobe adding a “variance” slider to the point color panel, I wasn’t completely sure what it did. After seeing it in action, though, I get it: it essentially lets you select a hue in your image, and then move similar hues either closer to it or further apart, removing or adding color contrast.

| Turning the slider up can (in this case, over-)emphasize the difference between similar hues in your image. |

The original use for it was evening out skin tones, which helps de-emphasize blemishes without entirely smoothing someone’s face. However, it can also be used to emphasize subjects in your scene without having to resort to globally increasing contrast, saturation or clarity. I saw demos of it being used to make foliage, which had looked relatively monochromatic in the original picture, really pop, or to make the Taj Mahal stand out despite being behind a thick layer of smog.
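For the curious, here’s one way to picture what a slider like this could be doing. This is only a rough sketch and not Adobe’s actual implementation: the function names, the `amount` scale (-1 to 1) and the 30-degree `hue_range` are all assumptions made up for illustration. It treats hue as an angle on the color wheel, and scales each nearby hue’s distance from the one you picked.

```python
def signed_hue_diff(hue, ref):
    """Shortest signed angular distance from ref to hue, in degrees [-180, 180)."""
    return (hue - ref + 180.0) % 360.0 - 180.0


def apply_variance(hues, ref, amount, hue_range=30.0):
    """Pull hues near `ref` toward it (amount < 0) or push them apart (amount > 0).

    Hypothetical model: `amount` runs from -1 (collapse onto ref) upward,
    and only hues within `hue_range` degrees of `ref` are affected.
    """
    adjusted = []
    for hue in hues:
        diff = signed_hue_diff(hue, ref)
        if abs(diff) <= hue_range:
            # Scale the distance from the reference hue, then wrap to [0, 360).
            adjusted.append((ref + diff * (1.0 + amount)) % 360.0)
        else:
            # Hues outside the selected range are left alone.
            adjusted.append(hue)
    return adjusted
```

In this toy model, a negative amount evens out similar hues (the skin tone use case), while a positive amount spreads them out (the foliage use case). Hues outside the selected range aren’t touched.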

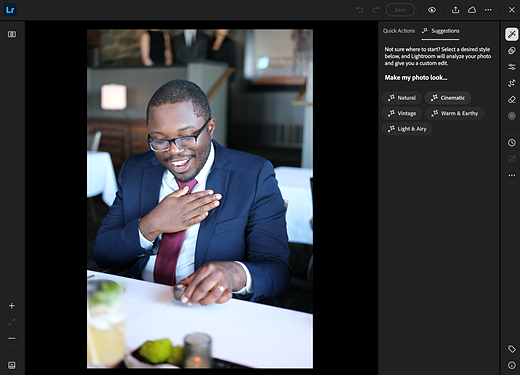

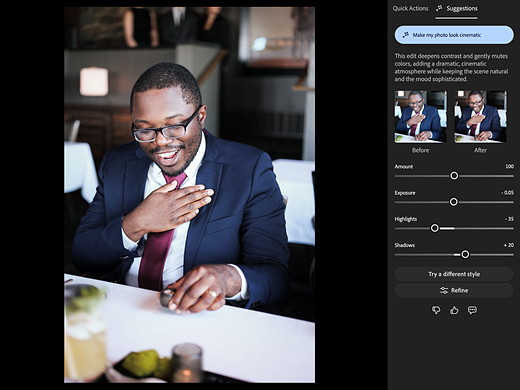

Agentic Editing

| Suggestions are like presets, but instead of preset changes, they analyze the actual image you’re applying them to. |

The Lightroom team also showed me a beta feature called Edit Suggestions, which they described as their first toe into the waters of “agentic style” editing. It’s a bit of a mix of the current Quick Actions found in Lightroom Mobile and Web and the Adaptive Profiles that analyze your photos to give you a better baseline for your edits (though the team tells me it doesn’t rely on the tech from the latter).

Essentially, it’s a tab that says “make my photo look” and then gives you several pre-made options, such as “vintage” or “cinematic.” It will then change a bunch of parameters, such as color mixing, grain settings, curves and, of course, the standard exposure sliders.

| Lightroom explains what changes it made and, of course, lets you refine them. |

The team made it clear that these aren’t just presets, though; it won’t make the same exact changes each time. Instead, the adjustments it makes, and how far it takes them, will be based on the specific photo. Ask for the same thing on a different photo, and you’ll get different settings.

It’s easy to imagine a more open-ended version of this in the future, similar to the AI assistant that’s coming to Photoshop, though the team isn’t making any announcements in that direction yet.

The Photoshop demos

With Photoshop, I didn’t get to see many features that I hadn’t already written about, but I was able to get some insight into how they worked under the hood and how the folks at Adobe were thinking about them.

Same remove tool improvements

To start, the Remove tool received the same updates as the one in Lightroom, and should now be better at removing both an object and any reflections and shadows it may cast. And while the process still uses cloud-based generative AI, Adobe says that they were able to make the model so efficient that the feature doesn’t cost any of the generative credits that come with its plans (which is good news for those using the standard Creative Cloud plan or the inexpensive Photography plans, which only come with a few).

I also got a pro tip from Stephen Nielson, Sr. Director of Product Management of Photoshop, who said that people often try to use the Generative Fill feature to remove unwanted elements from an image, rather than the Remove tool. He doesn’t recommend that. For one, it costs credits; for another, it’s actually not as good at erasing something from an image. You might end up with the problem that most of us are likely familiar with, where you try to get rid of something, only to have it replaced by something else.

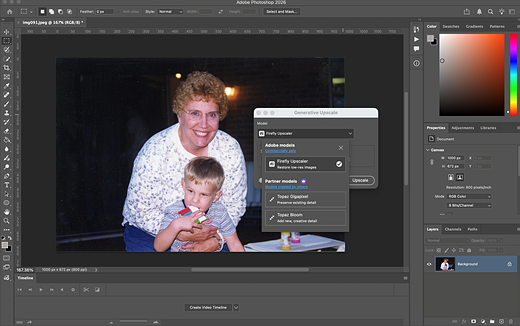

Generative Fill and Partner Models

| You can now use Topaz’s Gigapixel upscaling model from right within Photoshop, though doing so will require premium credits. |

With that said, Generative Fill has gotten a big upgrade with the introduction of Partner Models, which let you do image generation not just using Adobe’s own Firefly AI, but also with services like Google’s Gemini 2.5 Flash Image (AKA Nano Banana) model. Nielson says the new models available, including Adobe’s latest-gen one, will now be much better at following prompts, something earlier versions couldn’t do well (though that didn’t stop users from trying). For example, if you wanted it to change the color of someone’s sweater, or even add a whole new overcoat, Generative Fill is now much better equipped to do so.

While a lot of photographers would rather not use generative AI to alter their images, Partner Models touches on other features as well. For example, if you frequent DPReview’s forums and comments, you’ll likely have seen recommendations for several of Topaz Labs’ AI tools, such as the Gigapixel upscaler and its denoise software. The AI models underpinning those are now available to use in Photoshop, alongside Adobe’s own models, without you having to have a separate subscription for them.

The local selection

| The select tool did a pretty decent job with this very complex object (even if it did miss a few very low-contrast spokes and treat the two bikes as one). |

Speaking of AI models, the subject select tool, which lets you cut out an object from an image, has been improved, too. Nielson said this actually came in April, when Adobe added the ability to run the subject recognition process in the cloud, using a more complex model that could do a better job with fine details. Now, though, that model no longer requires the cloud; it can run right on your computer.

I asked Nielson if that was something the Photoshop team was interested in bringing to more AI features, and while he cautioned that not every model could even be run on today’s desktops and laptops, it was definitely something the team considered. “If we can bring a model to run locally, yeah, we’ll do it,” he says.

Jarvis, label my layers

The splashiest feature was likely the Photoshop AI assistant, which I actually didn’t get to see a demo of; it’s currently only available in closed beta and in Photoshop for the Web. The idea is that you’ll have a chat box that you can type commands into that an AI will try to execute within Photoshop. The crowd-pleasing demo shown during the keynote asked it to name all the layers, but Adobe says it’ll be able to make adjustments to how your image looks and even give you feedback on your current edits.

The latter part was, to me, a surprisingly big focus for Adobe. Nielson says the AI isn’t analyzing whether the image or design is good or bad, per se, but rather just looking for ways that it could be better (though it wasn’t exactly clear where the definition of “better” comes from). While he conceded that it could be used as a tool to learn how to do more complex edits or to remind you how to do a specific operation, he says they don’t want it to just be a super-powered help tool. They view the AI more as an automation tool, something that’ll do repetitive tasks that you can’t be bothered with, but with a contextual understanding of your image that you wouldn’t be able to get with, say, Actions or macros. Speaking of, though…

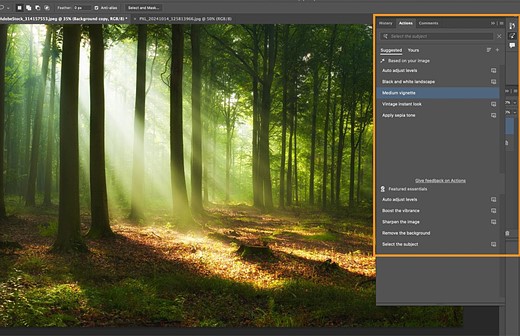

Action-packed

| Adobe is carefully reworking the Actions panel. Image: Adobe |

I also got a sneak peek at some of the progress being made on the updated Actions panel, which can also suggest edits to you based on the image you’re working on. According to Pete Green, another Photoshop product manager, it pulls suggestions from a bank of over 500 pre-made actions. The team is also working on revamping the workflow for creating and recording custom actions, letting you add icons and labels to them to make them easier to pick out from the list.

According to Green, there’s been a lot of effort to make sure that the actions made by the new system are backwards-compatible, and that all your existing actions will still work. He says the team is being meticulous about the updates it makes, with the knowledge that actions are a long-standing and vital part of many people’s workflows.

What’s next?

Looking at the demos I saw this week, the thing that stood out to me most is that the gap between Lightroom and Photoshop’s purposes has never been wider. Photoshop has become Adobe’s main tool for dealing with imagery, and several of its features may seem like utter anathema to those who care about capturing moments and whatever truths may lie within them. Lightroom, meanwhile, is laser-focused on the needs of photographers, gaining features that I suspect will be quite popular, especially with our audience.

I do think there’s room for a bit more of Photoshop to bleed into Lightroom, though. I was honestly surprised that Lightroom isn’t getting the Topaz partner models, as I can imagine a lot of photographers wanting to use those, especially on older photos. The Lightroom team told me that they haven’t ruled out the possibility of adding them and that customer feedback would be an important part of their considerations.

That raises the question: what would you like to see in Photoshop or Lightroom? Leave a comment down below or in our forums.